How does your data make you feel, or even the word data, the idea of data?

In too many cases, it comes with a stab of anxiety, a feeling of weight, even dread. For years, we've built miraculous things based on what we could do with the technology we've had. But that's been limiting, and in order to put everything back together at the end of the day, we've multiplied complexity upon complexity, and we've paid the costs in both finances and in human capital.

We have data for things because we care about them, and the things that we care about and interact with regularly are things that we should be able to feel natural and comfortable and fluent about. With that in mind, I am here to describe a new way of thinking about data and information, which I call a holograph. In this case, because it supports all the features required for business use, I'm calling it an enterprise holograph.

The purpose of this inquiry is to discover and describe a human-centric, flexible, and evolvable approach to information based on the processes of our own intelligence, while also maintaining high ethical and technical data standards.

In addition to being human-centric, it embraces the data-centric paradigm, including important principles like:

The instructions and objects in this system are written in language that both humans and machines can understand.

The data belongs to you and is portable and reusable, not locked away inside a platform.

The work you put into building this and the data you put into it are widely useful, not just to yourself but also to developers and partners, which makes future projects, even moving between platforms, orders of magnitude easier and cheaper.

Compound Organisms, Common Language

We think of ourselves as individuals. And indeed, the question of identity is at the heart of this new paradigm of semantic graph data.

We are compound organisms though. Most of the genetic information that makes us and keeps us working comes from the microbes and the viruses that we contain rather than our own DNA. And what lets all those parts work together is a shared language of amino acids and proteins.

In the same way, a holograph is not a big clockwork monolith or a Rube Goldberg device, but an information ecosystem held together by shared language.

Integrity and Intelligence

At the heart of the matter is something that feels like a contradiction, or at least a tension. Data is physical in the sense that it has an existence: it's written down, it's discrete. We're responsible for it. We're past the days when it was acceptable not to keep track of where it came from or when we got it. Now we have to be able to remove erroneous data or, worse, toxic data without breaking the system. These are our ethical and even legal requirements.

Concept formation though, the world of ideas and abstractions, is different. As we build the system and name things and describe the parts, we're going to be wrong. It's just going to happen. It's part of the process. And we're going to learn to see things more clearly and get better ways of understanding things. That's the kind of behavior that this technology needs to support.

Previous data paradigms that make structural transformation difficult discourage us from doing the most important and most consequential things. The power of concepts is that they can be reused, extended, and even changed in different contexts and, as with the data side, without violating the integrity of the system.

What is a Holograph?

It's not a product, it's an idea or a set of ideas that can be used to build a coherent, harmonized system that has both flexibility and integrity.

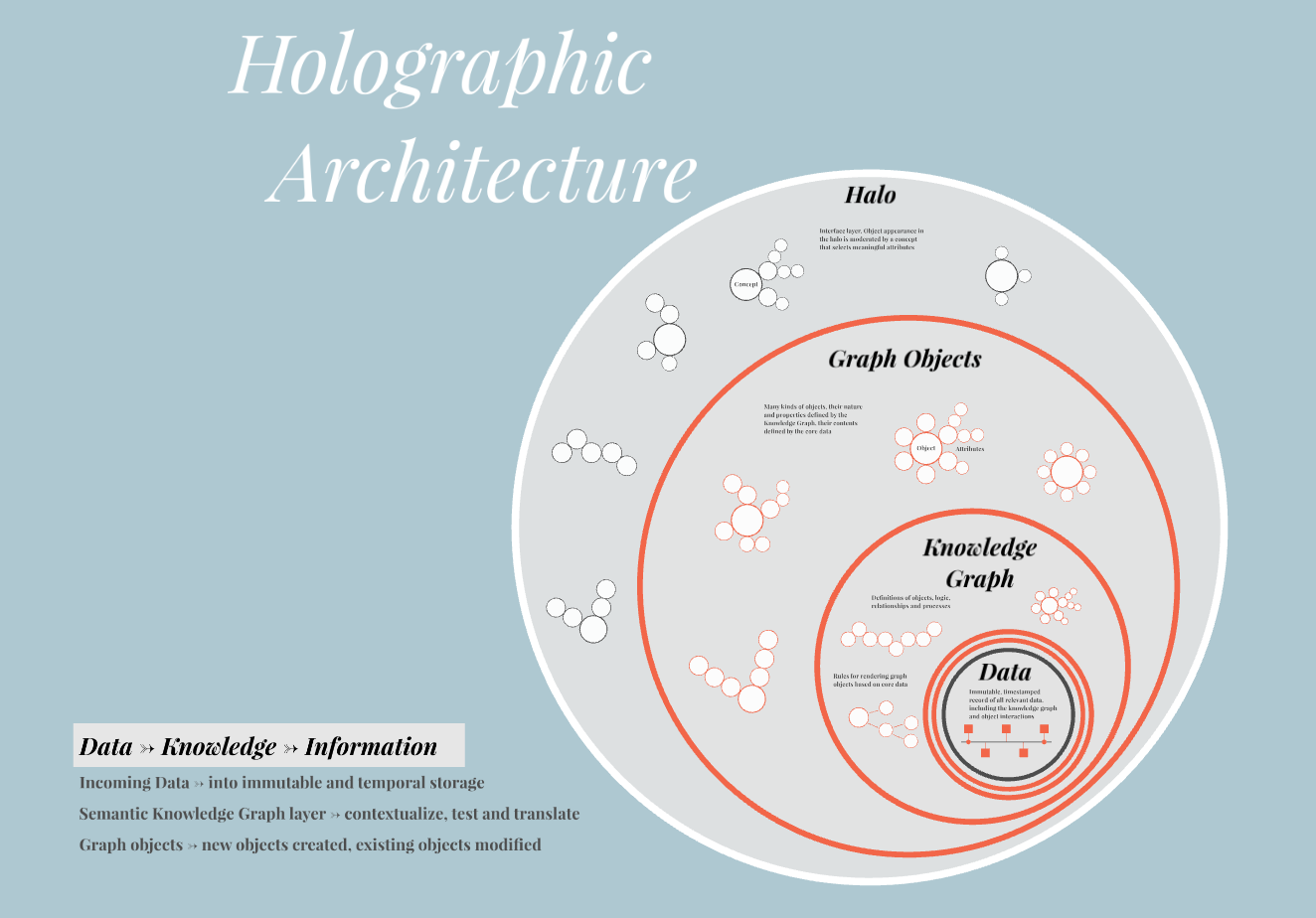

At the core of the holograph is a data repository, a permanent record in which raw, semantic, and structural data are saved with notes about where they came from, when we acquired them, and when changes were made. This is the part that is auditable, and immutable in the sense that it captures all the changes that have been made to objects and structures over time.
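One way to picture that core is as an append-only log in which every entry carries its own provenance. The following is a minimal sketch under that assumption, not a description of any particular product; the names `Record`, `DataCore`, `history`, and `as_of` are all hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Any, Optional


@dataclass(frozen=True)
class Record:
    """One immutable entry in the core (all field names are illustrative)."""
    key: str               # what the entry is about, e.g. "customer:42"
    kind: str              # "raw", "semantic", or "structural"
    payload: Any           # the data itself
    source: str            # where it came from
    recorded_at: datetime  # when we acquired it or when the change was made


class DataCore:
    """Append-only log: entries are added, never edited or deleted in place."""

    def __init__(self) -> None:
        self._log: list[Record] = []

    def append(self, key: str, kind: str, payload: Any, source: str,
               recorded_at: Optional[datetime] = None) -> Record:
        # Default to the current UTC time when no timestamp is supplied.
        record = Record(key, kind, payload, source,
                        recorded_at or datetime.now(timezone.utc))
        self._log.append(record)
        return record

    def history(self, key: str) -> list[Record]:
        """Every entry ever written for this key, oldest first."""
        return [r for r in self._log if r.key == key]

    def as_of(self, key: str, when: datetime) -> Optional[Record]:
        """The latest entry for this key recorded at or before `when`."""
        earlier = [r for r in self.history(key) if r.recorded_at <= when]
        return max(earlier, key=lambda r: r.recorded_at) if earlier else None
```

Because nothing is overwritten, "what did we know yesterday?" becomes a lookup with `as_of` rather than a restore from backup.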

That data core is surrounded by a knowledge graph layer: not a single knowledge graph, but a compound organism of subgraphs that describe objects, rules of logic, relationships, processes, and ways of validating information.

And the structures in the knowledge graph render that semantic data into objects, into this world of graph objects, in which all kinds of different things exist as things that we understand, things we care about, things we give a name and attach named attributes to. There are many, many attributes for all these objects though, more than we would ever really want to look at.

So most of our interaction with them happens through this halo lens* that surrounds the whole thing. The lens is itself part of the whole, in that even the concepts here are defined by subgraphs. But in this case, rather than looking at a customer and seeing everything we could ever possibly know, we use a concept that says customer in a particular context and has only a few important surface features. (Note: after some discussion and thought, I realize that the similarity between ‘Halo’ and ‘Holo’ is confusing. ‘Lens’ is more consistent with the idea of holographic images, and it affects what you can see inside the holograph, but doesn’t really change the nature of it.)
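As a rough illustration of a lens, the sketch below treats each context as a short list of surface features and returns only those from the full object. The contexts, attribute names, and the `view` function are assumptions made up for this example; in a holograph the lens definitions would themselves be subgraphs rather than a Python dictionary.

```python
# Hypothetical lens definitions: each context names the few surface
# features worth showing. These stand in for lens subgraphs.
LENSES = {
    "support": ["name", "email", "open_tickets"],
    "marketing": ["name", "last_purchase"],
}


def view(obj: dict, context: str) -> dict:
    """Return only the attributes that the lens for this context exposes."""
    return {attr: obj[attr] for attr in LENSES[context] if attr in obj}


# A graph object usually has far more attributes than anyone wants to see.
customer = {
    "name": "Ann",
    "email": "ann@example.org",
    "open_tickets": 0,
    "last_purchase": "2024-06-10",
    "loyalty_tier": "silver",
    "created": "2019-03-02",
}

marketing_view = view(customer, "marketing")
```

The underlying object is untouched; the lens only changes what is visible in a given context.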

There's a common formulation that goes from data, to information, to knowledge, and then perhaps to wisdom. This is the tooling version of that, where semantically defined knowledge structures translate data into information, into objects in that world of graph objects.

Many Species of Subgraph

The thing that makes all of these things harmonious, that makes it a holograph, is that all the moving parts are made of the same thing, structures called “subgraphs.” This is like the DNA and the proteins. They're semantic structures that you could print out, read, and, with a little help, understand, or give to someone else who can then understand all the fundamental parts and principles you're talking about.

How Does it Work?

We've made a lot of progress over the years in describing complex systems as knowledge graphs, but the question always seems to arise of what it looks like when there's actual data in the system. When you build something with it, have you seen it in the wild? Does it really work? The purpose of this holograph is to answer that question and make something that works at the level of individual bits of data. With that in mind, I’ve put together three basic scenarios to describe how data and information move through the whole.

1. Inbound Data

In the first case, data comes in from the outside world. It might be from a website or from a piece of connected equipment, and it enters via a point that again is defined by a semantic subgraph. The entry point says: when data comes in here, register where it came from and when we got it, and then write that data into the core with a timestamp.

From there, it bounces against a data contract, again a semantic subgraph, that contains the rules to evaluate whether the data is acceptable and also the manipulations required to get it into the semantic language of the whole system. So it's saved both in its native version and then saved again as semantic data.

That semantic data then passes through yet another subgraph, in this case one that describes an object, like Customer, where it will create a new object or modify properties of an existing object.
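The inbound path described above can be sketched in a few lines. This is only an illustration under simplifying assumptions: the core is a plain list, the data contract is a dictionary with hypothetical `required` and `rename` keys, and the object definition is reduced to "create or update by id". None of these names come from a real system.

```python
from datetime import datetime, timezone

core = []     # stand-in for the data core: an append-only list
objects = {}  # stand-in for the world of graph objects, keyed by id

# Hypothetical data contract: acceptance rules plus the renaming needed
# to express the payload in the system's own semantic terms.
CUSTOMER_CONTRACT = {
    "required": ["cust_id", "full_name"],
    "rename": {"cust_id": "id", "full_name": "name"},
}


def ingest(payload: dict, source: str, contract: dict) -> bool:
    """Run one inbound payload through entry point, contract, and object."""
    now = datetime.now(timezone.utc)

    # Entry point: register where it came from and when, save the raw data.
    core.append({"kind": "raw", "source": source, "at": now,
                 "data": dict(payload)})

    # Data contract: is the data acceptable?
    if any(field not in payload for field in contract["required"]):
        return False

    # Data contract: translate into the semantic language and save again.
    semantic = {new: payload[old] for old, new in contract["rename"].items()}
    core.append({"kind": "semantic", "source": source, "at": now,
                 "data": semantic})

    # Object definition: create a new object or modify an existing one.
    objects.setdefault(semantic["id"], {}).update(semantic)
    return True
```

Note that a rejected payload still leaves its raw record in the core, so there is a trace of what arrived even when nothing was rendered from it.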

2. User Edit

The second case is a user operating in an interface at the level of the Halo Lens: someone who opens a form to change the name on a record.

That information will again be bumped against the same data contract subgraph to make sure it's valid even before it's submitted and saved. If it passes, it's again written into the core as data, the raw version. It's then pushed through the definition of the object that it affects, where it's interpreted as semantic data and saved, and then also rendered out in the world as a change or a new object.

3. Builder Edit

The third case study is the one that really unlocks the flexibility and potential that we connect with concept formation and developing expertise. And that is a situation where someone at the level of a builder makes a change to the underlying knowledge graph, to the structures that make the graph objects exist in the world.

A change like that can have vast ramifications. Fortunately, this process allows you to test them locally: you take the data core, which you know is reliable and which you're not going to break, plug it into your new version of the knowledge graph layer, and run a simulation that renders all the data. You see what breaks, what doesn't fit, and what dependencies you might be violating, and you can resolve all of those things. It also gives you a platform for review and approval.
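A dry run of this kind might look like the sketch below: render every semantic record in the core against a candidate object definition and collect the problems, without writing anything. The shape of the definition (a dictionary of attribute names and expected Python types) and the function name `dry_run` are assumptions chosen to keep the example small.

```python
def dry_run(semantic_records: list[dict], candidate_definition: dict) -> list[str]:
    """Check every record against a candidate definition; change nothing.

    Returns a list of human-readable problems. An empty list means the
    candidate can render all existing data.
    """
    problems = []
    for index, record in enumerate(semantic_records):
        for attr, expected_type in candidate_definition["attributes"].items():
            if attr not in record:
                problems.append(f"record {index}: missing '{attr}'")
            elif not isinstance(record[attr], expected_type):
                problems.append(
                    f"record {index}: '{attr}' is not {expected_type.__name__}"
                )
    return problems


# Existing semantic data, as it might be read from the core.
records = [
    {"id": 1, "name": "Ann"},
    {"id": 2},
    {"id": "3", "name": "Cy"},
]

# A builder's proposed new definition of Customer (hypothetical).
candidate = {"attributes": {"id": int, "name": str}}

report = dry_run(records, candidate)
```

The report is what goes to review: each line is something to fix in the candidate definition or in the data before the change is approved.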

Assuming that you've found some problems, fixed them, revised it, and gotten a friend to sign off, the changes are then written into the knowledge graph, again, into the subgraphs, which are made of words that you can understand and structures that both you and machines can read. The knowledge graph itself, that subgraph, is saved in the data core as a version.

So if you had to go back to yesterday's version, it is still there. In addition, as part of this process of pushing it back out to the world, the semantic data that might be affected is updated in the core as well. It's not replaced. So again, you could go back to yesterday's data and see what changed and when.

When that happens, though, there's a chance that objects out in the world will have to be recreated. So, at whatever scope the change requires, the system will re-render objects in the world with their new properties, and presumably will notify other users or other connected systems that there's been a change at the level of architecture, and that they should keep an eye out for any possible consequences.

The Power of Concepts

The inspiration for this model came from the way we form concepts and build expertise. Graph objects in this world have surfaces, visible attributes that we can see in the process of understanding. And using these objects requires us to decide which of those innumerable surface level features to rely on as clues.

Experts are defined by their ability to know which of these features matter, and to associate some of those surface features with hidden traits, perhaps, while novices can't easily differentiate, and may not even be aware that there are hidden traits. If you've ever looked at a foreign data schema or object and seen a whole big list of things that relate to it without a sense of what most of them mean and when they're important, that's the novice experience of looking at an object.

What expertise does, what the development of concepts and knowledge does for us, is see things that are hidden to novices and perceive clues that might otherwise go unseen. Then, by giving those concepts names, bringing them up to the surface, and coding them into the knowledge graph layer, we make them visible to people who may not have that level of expertise, and usable in problem-solving scenarios.

Here’s another quick scenario from my own experience in the realm of marketing data. We have the object of the customer, with a whole bunch of associated attributes, but that's just too much to look at. So we have a concept or a view of the purchase history that shows just the customer and what they bought.

And we have a search pattern, a query that finds people who've made a purchase in the last two weeks and hadn't bought anything in the 10 months before that. Putting those together returns a set of objects that fit that pattern. Incidentally, there is also an interface philosophy I’m working on describing to help us make sense of those.

You can sort of see how a bunch of different objects, a bunch of different customers and their own histories show up as a result of the search and give us ways to relate them and arrange them, and in this case, to look even more deeply with expertise and realize that these people are making purchases in the two weeks before their child's birthday.

Perhaps the idea of a parent whose kid is about to have a birthday becomes a concept that can be registered in the enterprise and can be used by people, used by marketing teams, used anywhere it could be helpful. That's the power of concepts and developing expertise.
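The search pattern in this scenario can be written down quite directly. The sketch below is an illustration only: it works on a plain dictionary of purchase dates per customer, and it approximates "10 months" as 300 days, which is an assumption made for simplicity rather than anything the scenario specifies.

```python
from datetime import date, timedelta

RECENT = timedelta(days=14)      # "the last two weeks"
QUIET = timedelta(days=10 * 30)  # roughly "the 10 months before that"


def matches_pattern(purchase_dates: list[date], today: date) -> bool:
    """True if there is a recent purchase after a long quiet period."""
    recent_start = today - RECENT
    quiet_start = recent_start - QUIET
    bought_recently = any(recent_start < d <= today for d in purchase_dates)
    bought_in_gap = any(quiet_start <= d <= recent_start for d in purchase_dates)
    return bought_recently and not bought_in_gap


def find_customers(history: dict[str, list[date]], today: date) -> list[str]:
    """Names of all customers whose purchase history fits the pattern."""
    return sorted(name for name, dates in history.items()
                  if matches_pattern(dates, today))


# Purchase-history view: just the customer and when they bought.
history = {
    "ann": [date(2023, 6, 10), date(2024, 6, 10)],  # yearly, just returned
    "bob": [date(2024, 3, 1), date(2024, 6, 12)],   # also bought in the gap
    "cy": [date(2024, 1, 5)],                       # nothing recent
}

found = find_customers(history, today=date(2024, 6, 15))
```

Once a pattern like this has a name, it can be registered alongside the other concepts and reused by anyone, without each team rediscovering the date arithmetic.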

Integration and Interoperability

Part of the promise of semantics and knowledge graphs in this realm is that they allow integration and interoperability. Because the objects in these holographs are made from the same underlying language, the same conventions, and the same human- and machine-understandable logic, they can interact with each other. And even across domains and different kinds of objects, patterns and relationships can be determined that might otherwise have gone unseen.

They also allow integration with outside structures of information. The promise of the semantic web assumes a sort of fixed language that all websites share. Research communities share different sets of terminology and data standards around biology, around proteins and genes. With a holograph, all it takes is the right subgraph to translate incoming and outgoing information, making it possible to publish your information in a way the world can understand, or to take in information that people out in the world have published using the same standards.
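Reduced to its simplest form, a translation subgraph is a mapping between internal terms and the terms of a shared standard, usable in both directions. The sketch below assumes exactly that; the `ext:` names are placeholders rather than terms from any real vocabulary, and `translate` and `invert` are hypothetical helpers.

```python
# Hypothetical translation subgraph, reduced to a term-to-term mapping.
# The "ext:" names stand in for whatever shared standard applies.
INTERNAL_TO_EXTERNAL = {
    "name": "ext:fullName",
    "email": "ext:emailAddress",
}


def translate(record: dict, mapping: dict) -> dict:
    """Rename the keys a mapping covers; drop anything it does not cover."""
    return {mapping[key]: value for key, value in record.items()
            if key in mapping}


def invert(mapping: dict) -> dict:
    """Reverse a mapping so the same definition serves incoming data."""
    return {external: internal for internal, external in mapping.items()}


internal = {"name": "Ann", "email": "ann@example.org", "internal_score": 7}

# Outgoing: publish only what the shared vocabulary can express.
published = translate(internal, INTERNAL_TO_EXTERNAL)

# Incoming: read data published by someone else using the same standard.
received = translate(published, invert(INTERNAL_TO_EXTERNAL))
```

Attributes with no counterpart in the shared vocabulary, like `internal_score` here, simply stay inside the holograph.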

Incremental Adoption

Facing a data transformation project can be daunting. The idea of building a model that captures every last detail of your organization in semantic logic and data can seem imposing enough to be prohibitive.

Something on that grand scale is possible, the technology and the model support that, but it's also very well suited to starting small. If you build a “logical twin” of a particular project or process or domain, the conventions and the approaches that you establish as part of the process will expand and extend throughout future projects and throughout the rest of the organization. This can emerge as a set of holographs (or holographs within multiple domains) that operate as separate data products, but are also interoperable. They can be connected to each other, even moved from one domain to another. Insights that we find in one realm may be useful in another. Starting with clear questions and conversations that establish what we know about what we care about, and what we know about how it behaves, will settle many of the structural questions that can lead into a radical and widespread data transformation.

You can start small. It's something that you can build quickly, that you can build in an emergent situation like a new outbreak of wildfires or disease or revolutionary new technology. The fact that everything in it is clear and connected doesn't mean that it's large and complicated. It means that it can handle things that are large and complicated.

Complex Situations Demand Familiar Analogies

The power of concepts and abstraction lets us see complex situations as analogies, so I'll give you one last analogy. In this case it describes the system through analogy to fishing, but the same thing, solving problems by familiar analogy, can also be brought to bear on the things in the system.

The core of data is the solid ground under your feet. The knowledge graph layer describes what you know about: fish, lures and equipment, bodies of water, weather formations, etc. It's the rules of the game of the world around you.

That world is the world of the graph objects, all the fish, the individual lures in your tackle box, all the temperature gradients and the currents and the weather overhead.

Looking through the lens of the halo makes it possible to see through the water, to see every level of the water column, to see and identify the fish, and to see where in the current they might hang out and feed.

That sensation, being able to see deeply into something you care about and knowing what to do with it, that's what your data should feel like: natural ease, fluency, and mastery.